Evaluating Classroom Effectiveness

I strive to use teaching methods that accomplish the learning objectives I design into each lesson. I draw upon my own experience learning physics as an undergraduate: What worked for me? What did not? At the same time, I have to remain aware that not all students learn the same way I do, and that I need to adjust my teaching methods to reach as many students as possible in each class. This requires me to experiment with new techniques and lesson formats, and to evaluate carefully how successful each lesson or course turned out to be.

Assessing students' understanding through assignments and exams is one way to do this, but I also want to maintain an open dialogue with my students about the material as the course progresses. For this reason, soliciting feedback in the middle of the course (for example, after students have finished a new block of material) has become an important way for me to learn how well my students are responding to the teaching methods I have chosen.

Teaching Methods

As a graduate student, I have worked as a TA for four semesters. My responsibilities have been to lead discussion sections and to supervise laboratory experiments. In discussion sections, I typically begin with a brief lecture reviewing the physics concepts recently covered in the main lecture. Afterward, I assign practice problems and let students work on them on their own. By walking around the classroom and seeing how students approach the problems, I can get a sense of how well they understand the material.

Recently I have learned a lot about peer instruction and other active learning techniques that give students opportunities to receive feedback from both the instructor and their peers. For an in-class problem, the instructor begins by posing a question, and students take a short time to decide on what they think is the right answer. Students then discuss their answers with one another, and after the discussion they report their revised answers. This procedure has been shown to be effective at getting students to reconsider their assumptions and practice their reasoning. Given the opportunity to design and teach a full course in the future, I intend to experiment with peer instruction to see how students respond.

Post-Semester Student Evaluations

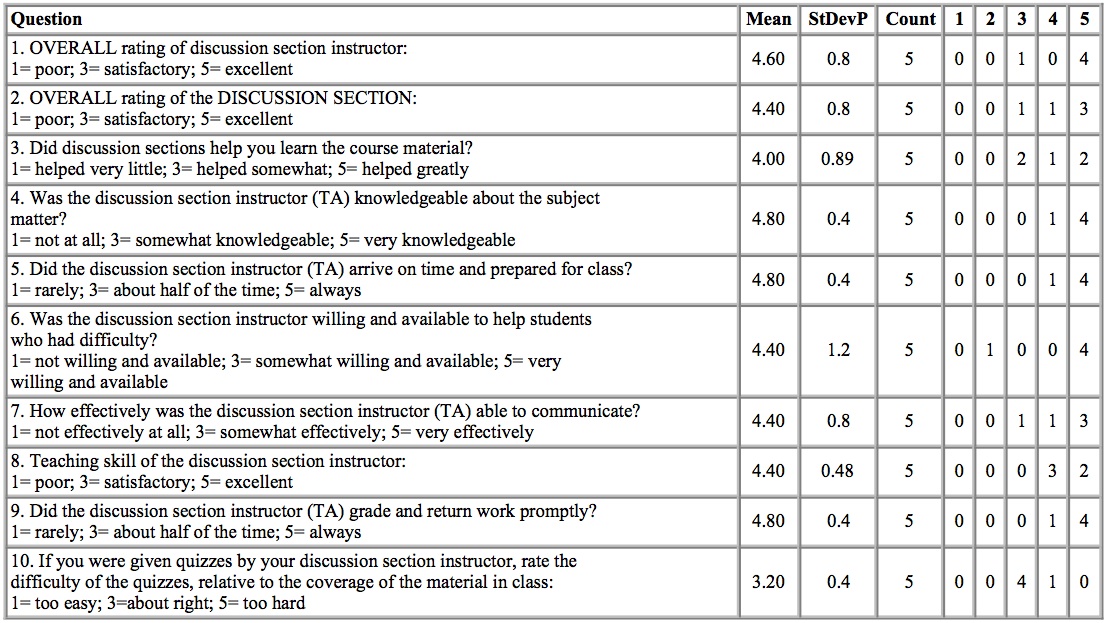

Shown here is one of the end-of-semester course evaluations I received from my students as a teaching assistant for Physics 1116: Mechanics and Special Relativity in Fall 2015.

Student comments

- "Daniel is extremely nice and very helpful. He is also available for help and is very understanding of everyone in the class having different expectations of what they want from the course. He is fairly approachable and good at explaining things, even if he has to think of a different way to present something because you didn't understand the first [time]."

- "Daniel should be a professor."

Annotations


Utility to Me

End-of-semester course evaluations are useful for some purposes: they show how students rated general aspects of the course and whether they thought I handled them well. Here, for example, I can see that students did not think the quizzes I assigned during discussion section were appropriately difficult. This is something I can re-examine and improve the next time I design quizzes or exams.

However, there is much more I would like to learn from my students about my teaching than this evaluation covers. Many of the questions concern my presence in the classroom rather than how effectively I conveyed the course material. A student who left this course fundamentally confused about special relativity, for example, might still rate my skills as a teacher a "5."

Furthermore, it is a little disappointing that only 5 of the 19 students in my discussion section responded to this survey. That sample is too small to judge whether the responses represent the attitude of the class as a whole. (Cornell does not currently give students any incentive to fill out course evaluations, so I can realistically expect only a few to do so.)


Utility to Students

End-of-course evaluations are also not particularly useful to students. Because the course is already over, they are unlikely to benefit from any changes I make to future courses based on their feedback.

Mid-course feedback would be much more useful to the students themselves. As an instructor, I can ask questions specific to the content and structure of my course. Instead of only asking "How is my teaching?", I can ask questions such as "Would you like me to spend more time lecturing, or would you prefer more time to study and practice with the material on your own?" or "Is there a topic you feel we did not spend enough time on?" With these mid-course evaluations, I can adjust the course to meet students' needs while they are still taking it. For example, I believe that time spent on long derivations copied from the textbook is largely wasted, but the students in my next course may want a more mathematically rigorous curriculum and ask me to explain those proofs in greater detail.


Experimenting with Mid-Course Evaluations

In the Spring of 2016, immediately after the first prelim exam, I asked my students to turn in anonymous feedback on how they felt the course was going. I asked them four questions:

- What actions did you take to prepare for the test?

- How prepared did you feel while taking the test?

- Did any of the questions surprise you?

- Is there anything you would like to change about the course?

With the first three questions, I wanted students to reflect on how they studied for the exam and whether they felt they had prepared enough. Interestingly, most students said that they felt sufficiently prepared and that none of the questions surprised them, even though the majority of the class failed to solve one of the test questions correctly. To me, this indicated that the physics concepts behind that question needed some reinforcement.

For the last question, a majority of students said the same thing: they wanted less time spent deriving formulas and more time spent discussing applications of the concepts. They felt unprepared for their homework sets, because lectures often showed where an equation came from but not how to actually calculate something with it. (This can be particularly daunting in any course that uses Maxwell's equations, which are straightforward to write down but difficult to use.) Afterward, I relayed this feedback to the lecturer and adjusted the problems covered in my discussion sections to connect more explicitly to the theorems from lecture.

Overall, this experiment with adding a mid-semester evaluation to the course helped me better understand how my students were learning and what I could do to be more effective. I will definitely continue to solicit evaluations and feedback from students in future courses.